Python's Relationship with Memory

In our previous letter, we went over how Python acts like a business analyst, helping translate code written in a high-level language into a low-level set of instructions the machine can understand. With the machine now in the picture, we should understand how its resources are used in real time.

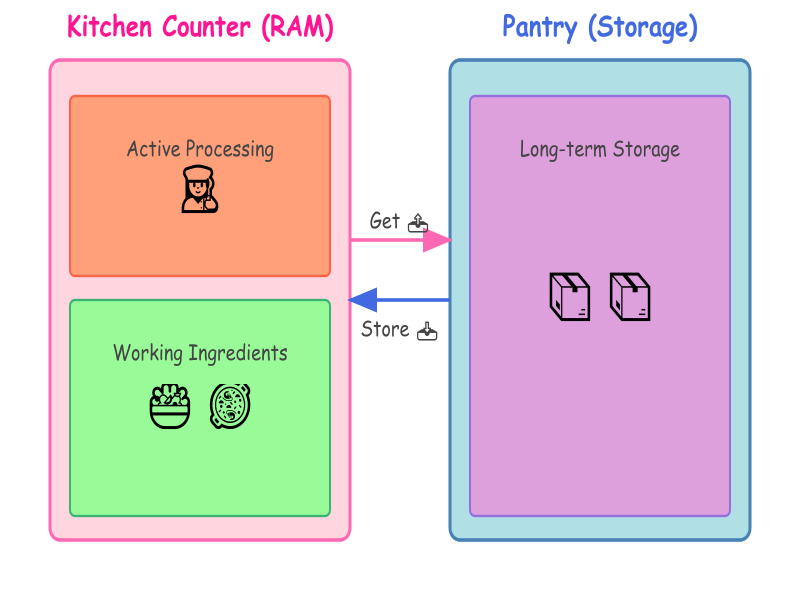

Python preparing an outcome, job, or task for us is much like us humans preparing food in our kitchen. When we decide to cook something, the choice depends on the ingredients already stored in our pantry, and while cooking we take only the amounts required and work on our counter space. It's not much different with Python: data is stored on disk (the kitchen pantry), and the data required to perform a task is brought into RAM, or memory (the kitchen counter).

The Kitchen Counter: this is like the RAM of your machine. The moment we run our Python script, it becomes an active process, and its set of machine-understandable instructions starts executing, just like a chef opening up a cooking station in the kitchen and clearing the counter space needed to start working on the ingredients. Right here in RAM, all the calculations, transformations, and processing take place, similar to cleaning, chopping, and marinating those ingredients.

The Pantry: this is like the disk storage (SSD or HDD) inside the machine. This is where data resides, occupying space even when it is not being used by any process. For example, say your Python script needs an ingredient (a CSV file on your hard drive) to arrive at an outcome or analysis: it goes to the specified location on disk, picks up the CSV file, and brings it into RAM.
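Here is a minimal sketch of that pantry-to-counter trip using Python's built-in `csv` module. The file name `pantry.csv`, its columns, and the helper functions are hypothetical, made up just for illustration. Notice that once the rows are loaded, they live in RAM as Python objects, even after the file on disk is gone.

```python
import csv
import os
import tempfile

# Hypothetical sample data: a tiny "ingredients" table we write to disk (the pantry).
SAMPLE_ROWS = [
    {"ingredient": "rice", "grams": "500"},
    {"ingredient": "lentils", "grams": "250"},
    {"ingredient": "onion", "grams": "150"},
]


def write_sample_csv(path):
    """Write the sample rows to a CSV file on disk."""
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["ingredient", "grams"])
        writer.writeheader()
        writer.writerows(SAMPLE_ROWS)


def load_all(path):
    """Read the whole CSV from disk into RAM as a list of dicts."""
    with open(path, newline="") as f:
        return list(csv.DictReader(f))  # every row now sits in memory at once


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "pantry.csv")
    write_sample_csv(path)
    on_counter = load_all(path)

# The temporary directory (and the file on disk) is gone, but the data is still in RAM.
print(len(on_counter), "rows on the counter")  # 3 rows on the counter
print(on_counter[0])  # {'ingredient': 'rice', 'grams': '500'}
```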

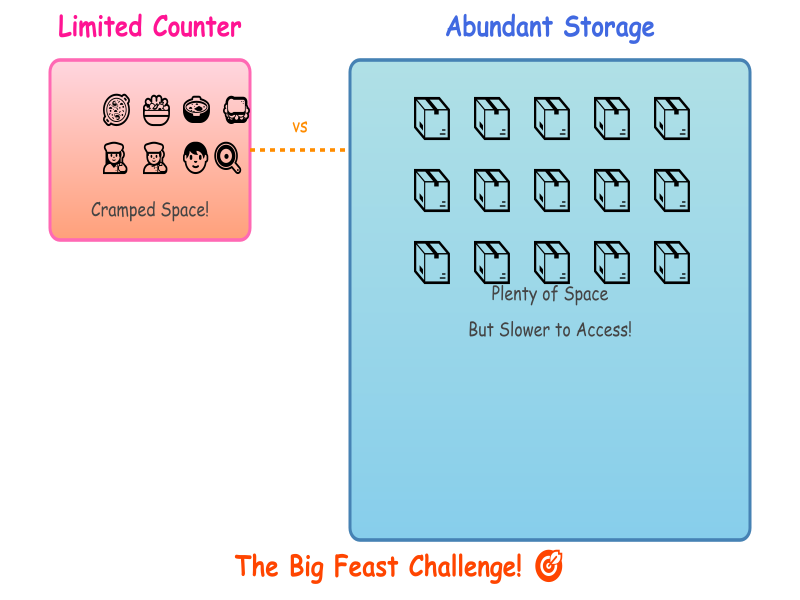

Now here's the real-world challenge: say you are preparing a feast for 1,000 people. With a small kitchen counter, this becomes intense, ain't it! Similarly, if your machine has to process a large dataset with limited RAM, it has to work under a lot of constraints. RAM is just like our counter space: premium, required for fast processing, but limited. Our pantry, the SSD/HDD, on the other hand, is massive: non-premium and slower to access, but it comes in abundance!

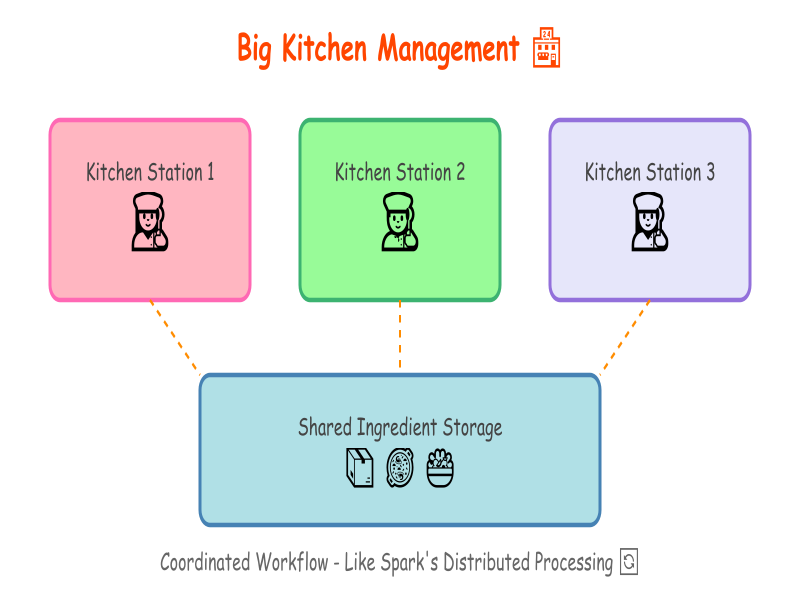

This is why data engineers need to move beyond basic Python and employ frameworks that coordinate the work, whether by spreading it across multiple chefs with smaller counter spaces or by shuttling ingredients between pantry and counter in manageable batches. Enter Apache Spark and DuckDB (don't worry about the nomenclature; we will dissect them as we move ahead, so chill!). These help us work with big data (big data? A simple answer: any size that chokes the RAM; no particular size can be labelled as big!) or larger datasets by managing the distribution of tasks and coordinating the traffic between disk and memory, i.e. RAM.

This understanding of orchestrating data movement between disk and memory is extremely crucial for us as data practitioners and engineers! It's no longer just about writing a few lines of Python code; it's about adding efficiency by managing the traffic size and distribution across data lanes. Hence, we ought to focus on:

- when to employ distributed systems vis-a-vis plain Python

- how to chunk the data to ensure that there are no memory overflows

- the best tools there are for managing different processing use cases/scenarios
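The chunking idea in the second point can be sketched with nothing but the standard library. This is a minimal illustration under assumptions: the file `feast.csv`, its `grams` column, and the helpers `read_in_chunks` and `total_grams` are hypothetical. Only one chunk of rows is on the counter (in RAM) at any moment, so memory use depends on the chunk size rather than on the size of the file.

```python
import csv
import os
import tempfile
from itertools import islice


def read_in_chunks(path, chunk_size):
    """Yield lists of at most chunk_size rows, so only one chunk is in RAM at a time."""
    with open(path, newline="") as f:
        reader = csv.DictReader(f)
        while True:
            chunk = list(islice(reader, chunk_size))  # pull the next batch from disk
            if not chunk:  # nothing left in the pantry
                break
            yield chunk


def total_grams(path, chunk_size=1000):
    """Sum the hypothetical 'grams' column without loading the whole file at once."""
    total = 0
    for chunk in read_in_chunks(path, chunk_size):
        total += sum(int(row["grams"]) for row in chunk)
    return total


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "feast.csv")
    # Hypothetical "feast": 10,000 rows of 100 grams each.
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["ingredient", "grams"])
        for i in range(10_000):
            writer.writerow([f"item_{i}", 100])

    result = total_grams(path, chunk_size=1000)
    chunk_sizes = [len(chunk) for chunk in read_in_chunks(path, chunk_size=3000)]

print(result)       # 1000000
print(chunk_sizes)  # [3000, 3000, 3000, 1000]
```

If you already use pandas, `pandas.read_csv(path, chunksize=...)` follows the same idea, returning an iterator of DataFrames instead of one big DataFrame.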

Alright, before we dive deeper into Spark or DuckDB, it makes sense to cover the essentials of Python in some detail, and then start having fun with the tools. Stay tuned for the next issue, and subscribe if you haven't yet!

And remember, whenever you run a Python script, you are putting on a show, leading an orchestra of computational resources on your machine. Somewhere down the line we will introduce ourselves to 'memory_profiler'; it's like a kitchen dashboard for monitoring the use of counter space. It will be interesting to see memory management in action!
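For a quick peek at that dashboard today, the standard library's `tracemalloc` module can report how much memory Python allocated while a function ran. This is a swapped-in stand-in, not memory_profiler itself, and the helper names and the `feast.csv` file below are hypothetical. The sketch compares loading every row onto the counter first against streaming rows one at a time.

```python
import csv
import os
import tempfile
import tracemalloc


def peak_memory(func, *args):
    """Run func(*args) and return (result, peak bytes traced by tracemalloc during the call)."""
    tracemalloc.start()
    try:
        result = func(*args)
        _, peak = tracemalloc.get_traced_memory()  # returns (current, peak)
    finally:
        tracemalloc.stop()
    return result, peak


def sum_eager(path):
    """Load every row into RAM first, then add up the grams."""
    with open(path, newline="") as f:
        rows = list(csv.DictReader(f))
    return sum(int(r["grams"]) for r in rows)


def sum_streaming(path):
    """Read one row at a time; only the current row is held in RAM."""
    with open(path, newline="") as f:
        return sum(int(r["grams"]) for r in csv.DictReader(f))


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "feast.csv")
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["ingredient", "grams"])
        for i in range(50_000):
            writer.writerow([f"item_{i}", 100])

    eager_total, eager_peak = peak_memory(sum_eager, path)
    stream_total, stream_peak = peak_memory(sum_streaming, path)

print(f"eager:     total={eager_total}, peak={eager_peak:,} bytes")
print(f"streaming: total={stream_total}, peak={stream_peak:,} bytes")
```

Exact byte counts vary by Python version and platform; the point is the gap between the two peaks. `tracemalloc` only sees allocations made through Python's own memory allocator, so treat it as a rough gauge rather than a full picture of the process's RAM.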

Till then, happy reading, and have a great time ahead! Cheers!